Value Prediction with Function Approximation

- tabular representation vs. function approximation

- function approximation can handle infinite state space (can’t enumerate through all states).

- linear function approximation

- design features ϕ(s)∈Rd (“featurizing states”), and approximate Vπ(s)≈θ⊤ϕ(s)+b , where θ should be fixed among features (in the following parts, b is ignored because it can be reached by appending a 1 to the feature vector).

tabular value function can be interpreted as feature vector ∈RS :

[0,⋯,0,1,0,⋯,0] where the position of the 1 indicates the state.

{: .prompt-info }

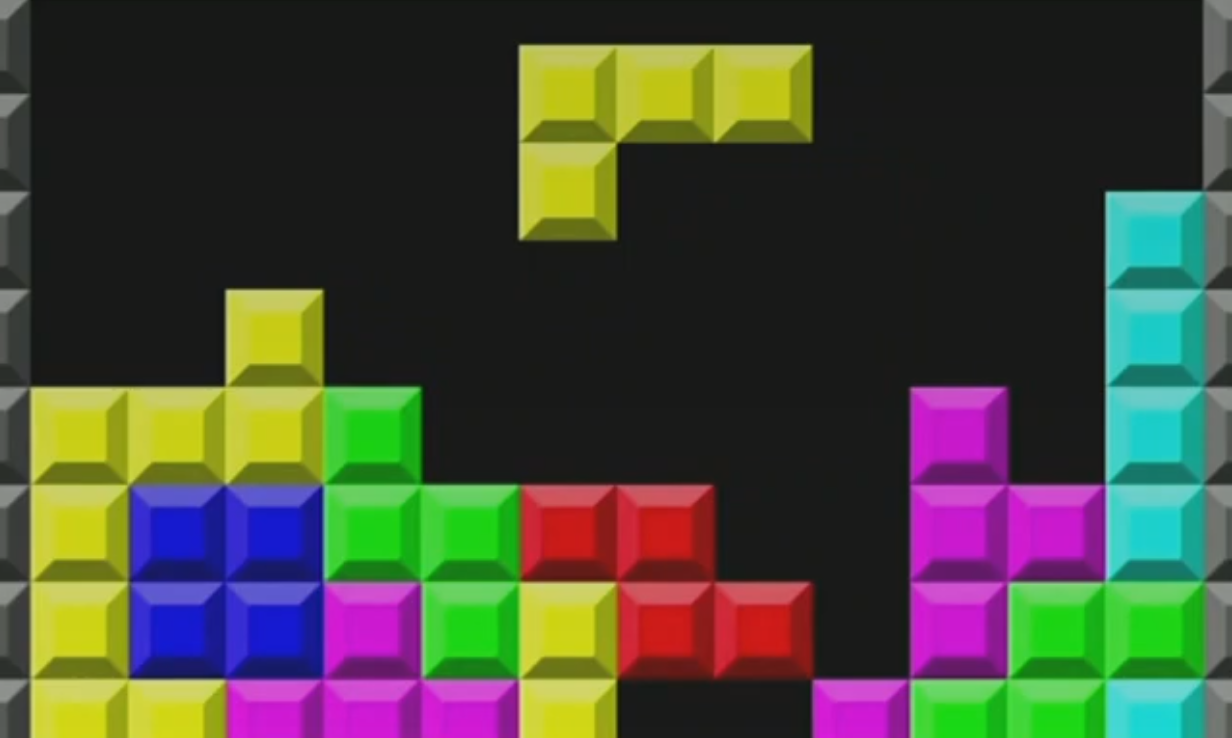

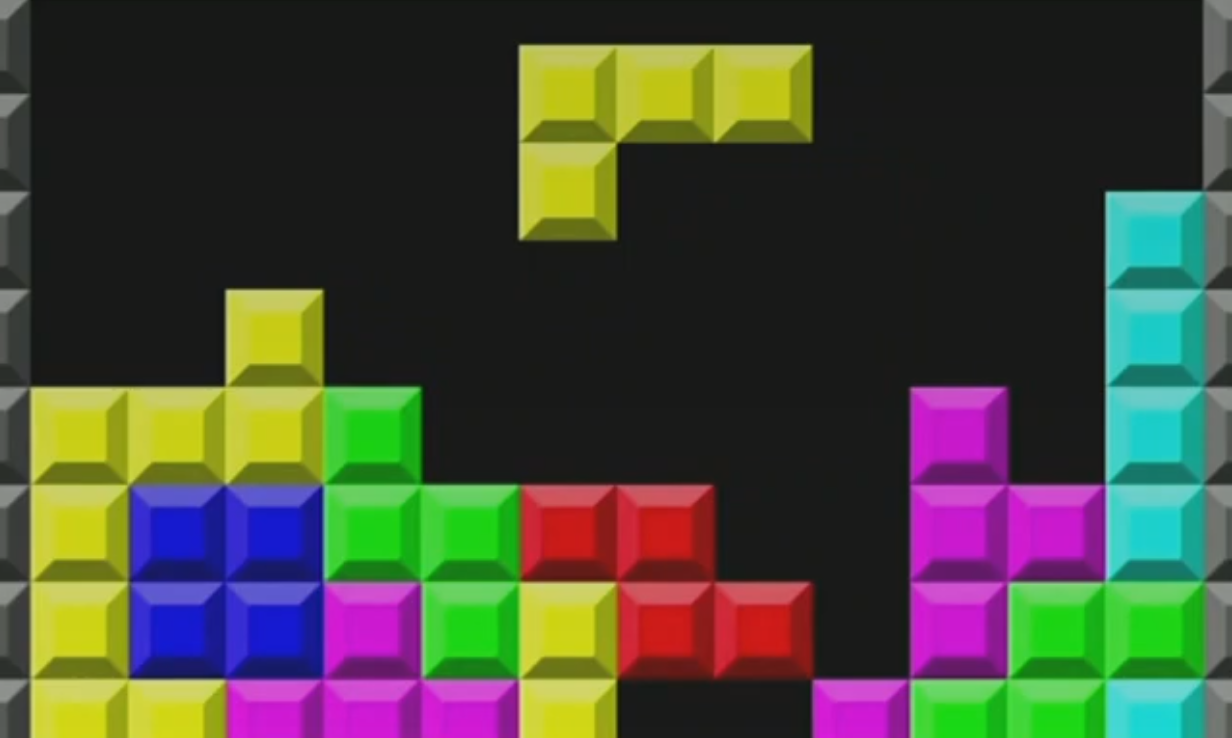

Example: Tetris Game

The state space is exponentially large: each block be occupied / not occupied.

Featurize: # of blocks on each column. In the example, the feature is (4454333⋯)

Monte-Carlo Vaule Prediction

Vπ(s)=E[G∣s]=f:S→RargminE[(f(s)−G)2]

Is a regression problem.

Why the expectation is the argmin? See here

{: .prompt-tip }

The same idea applies to non-linear value function approximation

More generally & abstractly, think of function approximation as

searching over a restricted function space, which is a set whose

members are functions that map states to real values.

E.g. a function space of linear value function approximation:

F={Vθ:θ∈Rd}, where Vθ(s)=θ⊤ϕ(s)

- typically only a small subset of all possible functions

- Using “all possible functions” = tabular!

- Equivalently, tabular MC value prediction can be recovered by choosing ϕ as the identity features \phi(\mathrm{s})=\left\\{\mathbb{I} \left[\mathrm{~s}=\mathrm{s}^{\prime}\right]\right\\}_{\mathrm{s}^{\prime} \in \mathrm{S}}

Find the function:

Vθ∈Fminn1i=1∑n(Vθ(si)−Gi)2

SGD: uniformly sample i and

θ←θ−α⋅(Vθ(si)−Gi)⋅∇Vθ(si)

Interprete Td(0) with Linear Approximation

TD(0) iteration is equivalent to

θ←θ+α(Gt−ϕ(st)⊤θ)ϕ(st).

Here θ is the tabular value function and ϕ is [0,⋯,0,1,0,⋯,0] , as mentioned here.

TD(0) with Linear Approximation

In TD(0), we do

V(st)←V(st)+α(rt+γV(st+1)−V(st)),

which, with all steps on t , gets

Vk+1←TπVk.

i.e.

Vk+1(s)=Eπ[r+γVk(s′)∣s]

Similar to Linear Approximation, rewriting expectation with a regression problem,

Vk+1(s)=f:s→RargminEπ[(f(s)−(r+γVs))2]≈Vθ∈Fargminn1i=1∑n(Vθ(si)−ri−γVk(s′))2.

And the SGD steps should be

θ←θ+α(Vθ(st)−rt−γVk(st+1))∇Vθ(st)